Label encoding vs one hot encoding

One hot encoding vs label encoding (Updated 2022) - Stephen Allwright: The answer depends very much on your context. However, given that one hot encoding can be used with all machine learning models, whilst label encoding tends to work best only with tree-based models, I would always suggest starting with one hot encoding and looking at label encoding if you see a specific need.

Multi-Label Classification with Deep Learning (Aug 30, 2020), reader comment: 3- Two of my label columns are categorical. To scale my dataset I use z-scores, and I encoded these two columns with ordinal encoding because their values were too different, and one-hot encoding isn't good for them. Is this correct? P.S. The referenced article doesn't split the dataset into train and test sets. Thank you very much in advance for answering.

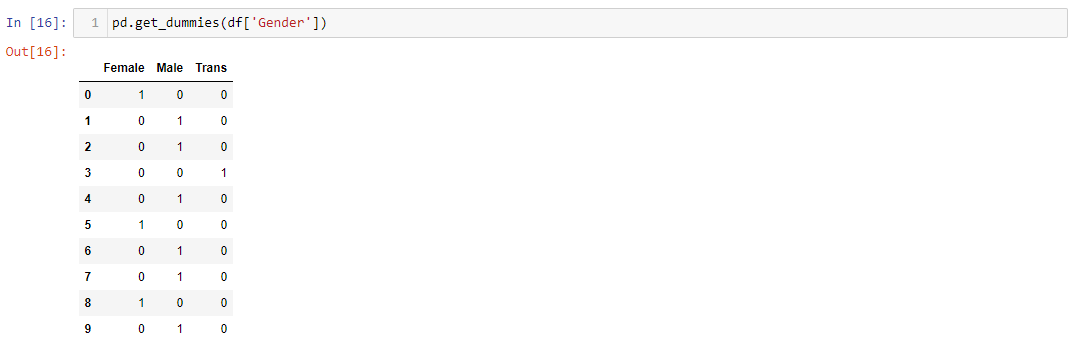

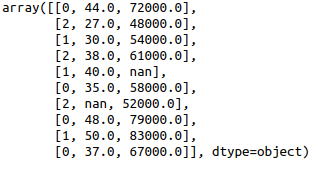

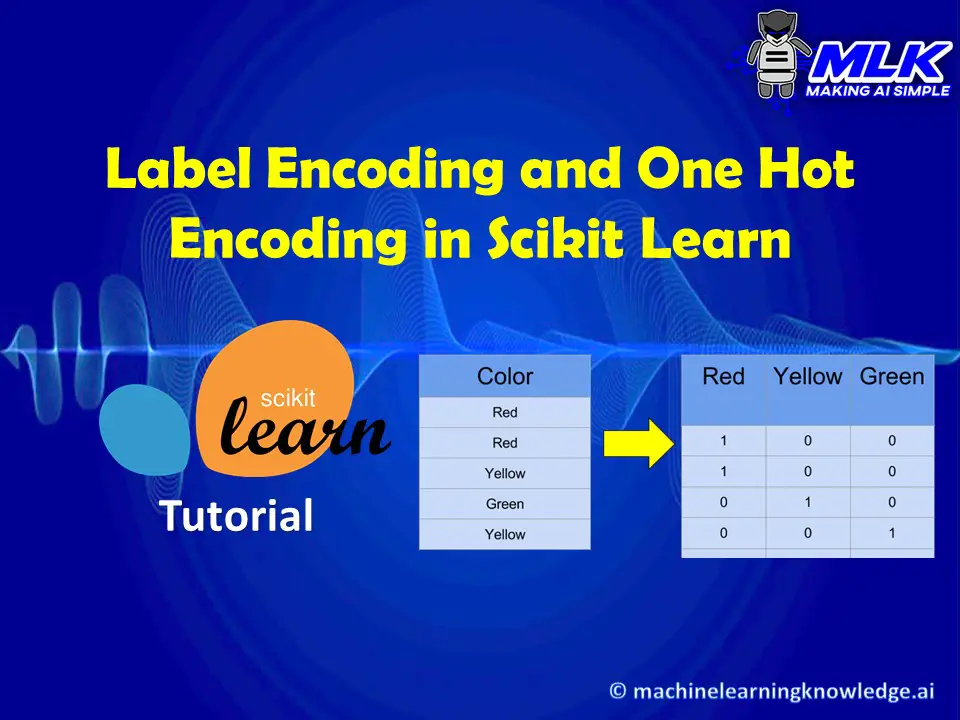

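Encoding Categorical Variables: One-hot vs Dummy Encoding. Both one-hot and dummy encoding can be implemented in scikit-learn with its OneHotEncoder class:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

original_data = np.array([["red"], ["green"], ["blue"]])  # illustrative input; must be 2-D
# drop=None -> one-hot encoding; drop="first" -> dummy encoding (one column fewer per feature)
ohe = OneHotEncoder(categories="auto", drop=None, sparse_output=False)  # sparse_output needs scikit-learn >= 1.2 (older: sparse)
encoded_data = ohe.fit_transform(original_data)  # dense NumPy array of encoded data because sparse_output=False
```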


Comparing Label Encoding And One-Hot Encoding With Python Implementation: Comparing the accuracy scores of the two encoding techniques, we can see that the model using the label encoder is less accurate than the model using the one-hot encoder. Outlook: Most of the time, a machine learning model's outcome is judged by the accuracy it achieves.

Choosing the right Encoding method-Label vs OneHot Encoder: The RMSE with the one-hot encoder is lower than with the label encoder, which means one-hot encoding gave better predictions, since an RMSE closer to 0 means a better fit. Don't worry about the large RMSE itself; as I said, this is just sample data that helps us understand the impact of the label and one-hot encoders on our model.

One Hot Encoding VS Label Encoding | by Prasant Kumar | Medium: There we use label encoders, because they replace the categories with labels that are comparable with each other. Taking the example of a satisfaction rating, replacing "extremely dislike" - 0,...
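To illustrate the satisfaction-rating idea, here is a minimal sketch with scikit-learn. Only the mapping "extremely dislike" -> 0 comes from the snippet; the other rating levels and the sample rows are hypothetical. It contrasts OrdinalEncoder, given an explicit level order, with LabelEncoder, which sorts labels alphabetically and therefore loses the intended order.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

# Only "extremely dislike" -> 0 comes from the text; the remaining levels are hypothetical.
levels = ["extremely dislike", "dislike", "neutral", "like", "extremely like"]
ratings = np.array([["like"], ["extremely dislike"], ["neutral"], ["extremely like"]])

# OrdinalEncoder with an explicit category list keeps the intended order (0 = worst, 4 = best).
ordinal = OrdinalEncoder(categories=[levels])
ordered_codes = ordinal.fit_transform(ratings).ravel()  # floats: 3.0, 0.0, 2.0, 4.0

# LabelEncoder sorts the labels alphabetically, so its codes no longer follow satisfaction.
label = LabelEncoder().fit(levels)
alphabetical_codes = label.transform(ratings.ravel())

print(ordered_codes)
print(list(label.classes_))
print(alphabetical_codes)
```

scikit-learn documents LabelEncoder as a tool for target labels; for ordinal input features, passing the explicit order to OrdinalEncoder is the safer choice.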

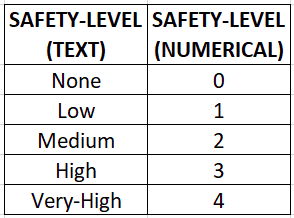

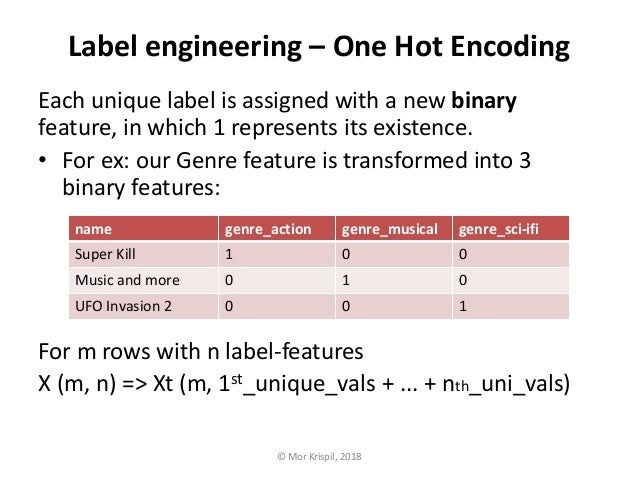

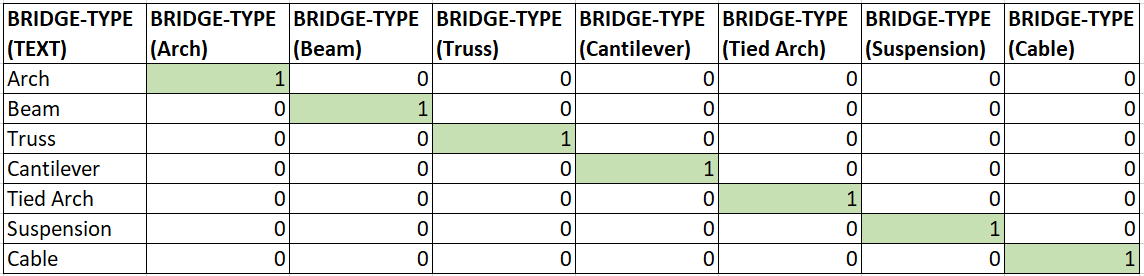

Label Encoding vs. One Hot Encoding | Data Science and Machine Learning ... One-Hot Encoding: One-hot encoding transforms each categorical feature with n possible values into n binary features, with only one of them active. Most ML algorithms either learn a single weight for each feature or compute distances between samples. Linear models (such as logistic regression) belong to the first category.

Feature Engineering: Label Encoding & One-Hot Encoding - Fizzy: Categorical data often require a transformation if we want to include them in a model; the two usual techniques are label encoding and one-hot encoding. Label Encoding: label encoding transforms text values into unique numeric representations. For example, 2 categorical columns, "gender" and "city", were converted to numeric values, a ...

When to use One Hot Encoding vs LabelEncoder vs DictVectorizor? There are still algorithms, such as decision trees and random forests, that work fine with label-encoded categorical variables, and LabelEncoder can be used to store values using less disk space. One-hot encoding has the advantage that the result is binary rather than ordinal and that every category sits in an orthogonal vector space.

Label Encoding vs One Hot Encoding | by Hasan Ersan YAĞCI - Medium: 1 — Label Encoding: Label encoding is mostly suitable for ordinal data, because we assign a number to each unique value in the data. If we use label encoding in...
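The orthogonality point, and the remark that many algorithms compute distances between samples, can be checked with a small NumPy sketch. The three colors and their integer codes are assumptions for illustration: under label encoding, "red" ends up twice as far from "blue" as from "green", while one-hot vectors place every pair of categories at the same distance, sqrt(2), and are mutually orthogonal.

```python
import numpy as np

colors = ["red", "green", "blue"]          # illustrative categories
label = np.array([0.0, 1.0, 2.0])          # label encoding in list order
onehot = np.eye(len(colors))               # one-hot encoding in list order

def pairwise(X):
    """Euclidean distance between every pair of rows."""
    X = X.reshape(len(X), -1)              # treat 1-D codes as a single column
    return np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)

d_label = pairwise(label)    # red-green = 1, red-blue = 2: an artificial ranking
d_onehot = pairwise(onehot)  # every distinct pair is sqrt(2) apart

print(d_label)
print(d_onehot)
print(onehot @ onehot.T)     # identity matrix: the one-hot vectors are orthogonal
```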

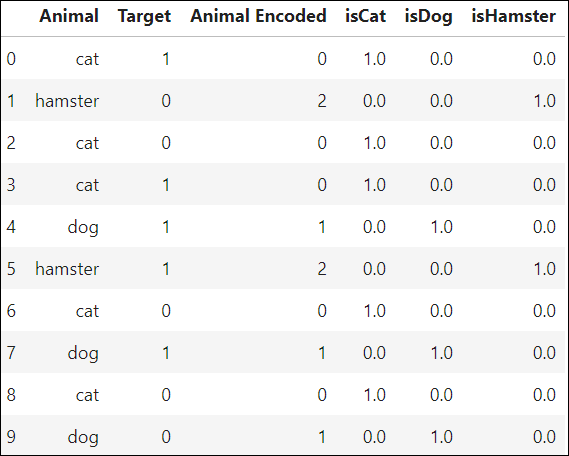

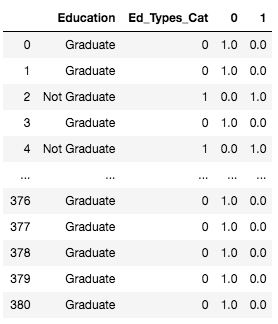

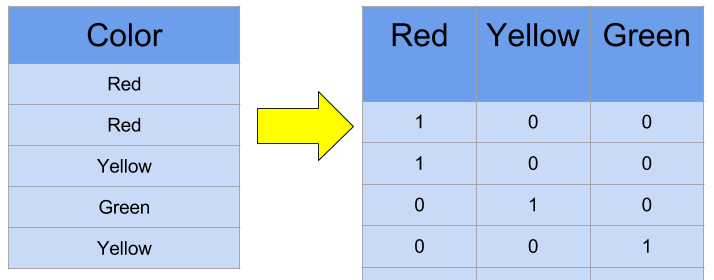

Difference between Label Encoding and One-Hot Encoding | Pre-processing ... In one hot encoding, each label is converted into an attribute (column), and that attribute is given the value 0 (False) or 1 (True). For example, consider a gender column with the values Male (M) and Female (F). After one-hot encoding, it is converted into two separate attributes (columns), Male and Female.

What is the difference between one-hot and dummy encoding? With one-hot encoding ($x_1, x_2, x_3, x_4$), the average weight of a fruit is a linear function of the encoding: $200x_1 + 150x_2 + 30x_3 + 100x_4$. This is something a regression can learn from data. With the 0, 1, 2, 3 encoding, there's no simple function that gives you the average weight of a fruit from its number.

Data Science in 5 Minutes: What is One Hot Encoding? Categorical data refers to variables that are made up of label values; for example, a "color" variable could have the values "red", "blue", and "green". Think of the values as different categories that sometimes have a natural ordering. Some machine learning algorithms can work directly with categorical data, depending on the implementation, such as a ...

Machine learning feature engineering: Label encoding Vs One-Hot ... In this tutorial, you will learn how to apply label encoding and one-hot encoding using scikit-learn and pandas. Encoding is a method to convert categorical va...
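The fruit-weight argument can be checked numerically. This sketch assumes four hypothetical fruits whose average weights are the 200, 150, 30 and 100 grams from the formula; it fits a least-squares model with NumPy on the one-hot columns (no intercept) and a straight line on the 0, 1, 2, 3 codes.

```python
import numpy as np

fruits = ["apple", "banana", "cherry", "kiwi"]        # hypothetical names, not from the source
avg_weight = np.array([200.0, 150.0, 30.0, 100.0])    # weights from the formula in the text

# Label encoding: fruit i -> integer i (0, 1, 2, 3)
label_codes = np.arange(len(fruits), dtype=float)

# One-hot encoding: fruit i -> i-th row of the identity matrix (x1..x4)
one_hot = np.eye(len(fruits))

# One-hot: least squares for weight = w1*x1 + w2*x2 + w3*x3 + w4*x4
w_onehot, *_ = np.linalg.lstsq(one_hot, avg_weight, rcond=None)

# Label encoding: best straight line weight = a + b * code
X_label = np.column_stack([np.ones_like(label_codes), label_codes])
coef_label, *_ = np.linalg.lstsq(X_label, avg_weight, rcond=None)
label_error = np.abs(X_label @ coef_label - avg_weight).max()

print("one-hot weights:", w_onehot)
print("line on label codes (a, b):", coef_label)
print("largest error with label codes:", label_error)
```

The one-hot fit recovers the four weights exactly, whereas the best line through the integer codes (intercept 183, slope -42) misses the third fruit by 69 grams, because the weights do not lie on a line when listed in code order.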

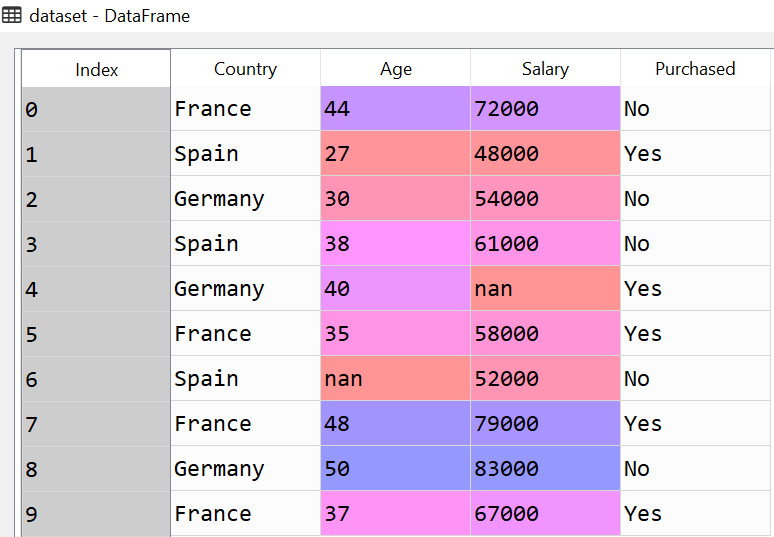

Label Encoding vs. One Hot Encoding: What's the Difference? One Hot Encoding: In most scenarios, one hot encoding is the preferred way to convert a categorical variable into a numeric variable, because label encoding makes it seem that there is a ranking between the values. For example, consider when we used label encoding to convert team into a numeric variable:

Categorical Encoding | One Hot Encoding vs Label Encoding: When the number of categories is small, one-hot encoding can be applied effectively. We apply label encoding when the categorical feature is ordinal (like Jr. kg, Sr. kg, primary school, high school), or when the number of categories is so large that one-hot encoding would lead to high memory consumption.

Label Encoder vs One Hot Encoder in Machine Learning [2022] - upGrad blog: One hot encoding takes a column that contains categorical data, which has already been label encoded, and splits it into multiple columns. The values are replaced by 1s and 0s, depending on which column has which value. The one-hot encoder does not accept 1-D arrays; the input should always be a 2-D array.

Label Encoder vs. One Hot Encoder in Machine Learning (Jul 30, 2018): What one hot encoding does is take a column that contains categorical data, which has been label encoded, and split the column into multiple columns. The numbers are replaced by 1s and 0s, depending on which column has which value. In our example, we'll get three new columns, one for each country: France, Germany, and Spain.

Xgboost with Different Categorical Encoding Methods: In this study, XGBoost with target and label encoding performed better on classes 0, 1, and 2, while XGBoost with one-hot encoding and entity embeddings performed better on classes 0 and 4. XGBoost with one-hot encoding and with entity embeddings led to similar model performance.

What are the pros and cons of label encoding categorical ... - Quora: What is one hot encoding used in machine learning? It is very simple and can be understood as follows. Suppose we have three colors, 'red', 'green' and 'blue'. We first convert these to integers, for example red->1, green->2 and blue->3. In one hot encoding, each value is then represented by a binary vector that has the same length for every sample (one position per category), with a 1 only at the position of its own category.
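The Quora description translates directly into code. This sketch keeps the answer's 1-based codes (red -> 1, green -> 2, blue -> 3) and builds each one-hot vector with NumPy; the function name one_hot is only illustrative.

```python
import numpy as np

# Integer codes from the example: red -> 1, green -> 2, blue -> 3
codes = {"red": 1, "green": 2, "blue": 3}

def one_hot(color):
    """Return a binary vector with a single 1 at the color's position."""
    vec = np.zeros(len(codes), dtype=int)
    vec[codes[color] - 1] = 1  # codes start at 1, array positions start at 0
    return vec

for color in ["red", "green", "blue"]:
    print(color, one_hot(color))
```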

Ordinal and One-Hot Encodings for Categorical Data. Encoding Categorical Data: There are three common approaches for converting ordinal and categorical variables to numerical values. They are:

- Ordinal Encoding
- One-Hot Encoding
- Dummy Variable Encoding

Let's take a closer look at each in turn. Ordinal Encoding: In ordinal encoding, each unique category value is assigned an integer value.
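The difference between one-hot and dummy variable encoding is a single dropped column. As an illustration with made-up color data, pandas.get_dummies produces both: drop_first=True removes the first category, which is then represented by all zeros.

```python
import pandas as pd

df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})  # illustrative data

one_hot = pd.get_dummies(df["color"], dtype=int)                 # one column per category
dummy = pd.get_dummies(df["color"], drop_first=True, dtype=int)  # drops the first category ("blue")

print(one_hot)
print(dummy)  # the "blue" row is all zeros
```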

Target Encoding Vs. One-hot Encoding with Simple Examples: Label encoding (give a numeric value to each category, e.g. cat = 0) is shown in the 'Animal Encoded' column in Table 3. ... One-hot encoding works well with nominal data and eliminates any ...
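Target encoding replaces each category with a statistic of the target, most simply its mean. The sketch below uses pandas on an illustrative animal column and binary target (not the article's Table 3 data) to show label codes next to a naive target encoding. It deliberately omits the smoothing and out-of-fold fitting that practical target encoders add to limit target leakage.

```python
import pandas as pd

# Illustrative data: an 'animal' category and a binary target (not from the article)
df = pd.DataFrame({
    "animal": ["cat", "dog", "cat", "bird", "dog", "cat"],
    "target": [1, 0, 1, 0, 1, 0],
})

# Label encoding: pandas category codes (categories sorted: bird=0, cat=1, dog=2)
df["animal_label"] = df["animal"].astype("category").cat.codes

# Naive target encoding: replace each category with the mean target of its rows.
# No smoothing and no out-of-fold fitting, so this leaks the target if used as-is.
means = df.groupby("animal")["target"].mean()
df["animal_target"] = df["animal"].map(means)

print(df)
```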

The Difference between One Hot Encoding and LabelEncoder? There you go: you overcome the LabelEncoder problem, and you also get 4 feature columns instead of 8, unlike one hot encoding. This is the basic intuition behind the binary encoder. **PS:** Given that 2^11 = 2048 and you have 2000 categories for zip codes, you can reduce your feature columns to 11 instead of 1999 in the case of one hot encoding!
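The binary-encoding intuition can be sketched in plain Python and NumPy. The helper binary_encode is hypothetical, not a library function; it numbers the categories from 1 (which matches the answer's 8 categories fitting into 4 columns) and writes each code in base 2, so n categories need n.bit_length() columns.

```python
import numpy as np

def binary_encode(values):
    """Hypothetical helper: category -> integer code (from 1) -> base-2 digits."""
    categories = sorted(set(values))
    codes = {cat: i + 1 for i, cat in enumerate(categories)}  # 1-based codes (assumption)
    n_bits = len(categories).bit_length()  # bits needed to write the largest code
    rows = []
    for v in values:
        code = codes[v]
        # most significant bit first
        rows.append([(code >> shift) & 1 for shift in range(n_bits - 1, -1, -1)])
    return np.array(rows, dtype=int), categories

X, cats = binary_encode(list("abcdefgh"))  # 8 illustrative categories
print(X.shape)              # 4 columns instead of 8 one-hot columns
print((2000).bit_length())  # columns needed for 2000 zip-code categories
```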

Categorical Data Encoding with Sklearn LabelEncoder and ... - MLK: Label Encoding vs One Hot Encoding. Label encoding may look intuitive to us humans, but machine learning algorithms can misinterpret it by assuming the codes have an ordinal ranking. In the article's example, Apple is encoded as 1 and Broccoli as 3. That does not mean Broccoli is "higher" than Apple, but it does mislead the ML algorithm.

regression - Label encoding vs Dummy variable/one hot encoding ... 1 Answer. It seems that "label encoding" just means using numbers for labels in a numerical vector. This is close to what is called a factor in R. Whether you should use such label encoding does not depend on the number of unique levels; it depends on the nature of the variable (and to some extent on the software and the model or method used). Coding ...

When to Use One-Hot Encoding in Deep Learning? - Analytics India Magazine: One hot encoding is an essential part of the feature engineering process when preparing data for learning algorithms. For example, if we had a variable such as color with the labels "red", "green", and "blue", we could encode each of these labels as a three-element binary vector: Red: [1, 0, 0], Green: [0, 1, 0], Blue: [0, 0, 1].

Difference between Label Encoding and One Hot Encoding - H2S Media. Conclusion: Use label encoding when your data contains ordinal features, which can give higher accuracy, and also when a categorical feature has too many categories, because in such scenarios one hot encoding may perform poorly due to the high memory consumption of creating the dummy variables.

What exactly is multi-hot encoding and how is it different from one-hot ... With one-hot encoding, you would represent the presence of 'dog' as a five-dimensional binary vector like [0,1,0,0,0]. With multi-hot encoding, you would first label-encode your classes, so that a single number represents the presence of a class (e.g. 1 for 'dog'), and then convert the numerical labels to ...
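A sketch of the two steps in the answer: label-encode the classes, then set a 1 at the index of every class that is present. Only 'dog' at index 1 comes from the answer; the other four class names and the helper multi_hot are hypothetical.

```python
import numpy as np

# Hypothetical five-class vocabulary; only 'dog' at index 1 comes from the text
classes = ["cat", "dog", "bird", "fish", "horse"]
label_of = {name: i for i, name in enumerate(classes)}  # step 1: label-encode the classes

def multi_hot(present):
    """Step 2: switch on the position of every class that is present."""
    vec = np.zeros(len(classes), dtype=int)
    for name in present:
        vec[label_of[name]] = 1
    return vec

one_hot_dog = multi_hot(["dog"])         # a single 1: identical to one-hot
dog_and_cat = multi_hot(["dog", "cat"])  # several 1s: multi-hot
print(one_hot_dog, dog_and_cat)
```

scikit-learn's MultiLabelBinarizer performs the same conversion for lists of label sets, if a library implementation is preferred.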
